Llama Travel 3

AI • Agents • LLM

September 10

When AI agents need to escalate to a human agent.

We made it! This is the final part of the series on Llama Travel and their AI agent, Llamita.

For this chapter, we’ll go over the things Llamita can’t solve and how it can tag in the customer engagement team, plus what data it can collect so it can learn and improve.

If you’re ready to land this case, fasten your seatbelt! And if you need a quick refresher before we leave high altitude, you can read my other articles on LLMs and AI agent basics.

Check out my earlier posts: Llama Travel 1, Llama Travel 2, LLM Champion, and LLM Master.

Let's go!

It's nighttime, and now we give Llamita a different hat

3) Escalation

In the previous articles, we discussed how the AI agent Llamita would assess what the user needed and how it could call in other agents to handle the request.

In the scenarios discussed, Llamita was able to correctly identify and solve the issue. Now, we are focusing on the cases where we definitely need human expertise and empathy to handle the situation.

We will divide this into three parts:

3.1 Escalation cases

3.2 Post and pre escalation

3.3 General tips.

3.1 Escalation cases

What cases should Llamita not touch?

Quick answer: it depends, mostly on what the AI agent’s training has prepared it to handle. For example, Llama Travel might have launched the agent while a new premium package or feature was still in development.

So Llamita could answer basic questions, but anything more in-depth would need a human representative to provide details while the documentation catches up.

This also applies when the team wants to track certain cases manually. For instance, if an airline is experiencing lots of booking issues and strikes, the customer engagement team may want to be hands-on with anything related to it.

Editing Llamita’s original prompt to ask it to escalate cases related to these specific topics can help.
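As a minimal sketch of that prompt edit, assuming the prompt is assembled from plain strings: the base prompt wording, the topic list, and the `build_system_prompt` helper below are all hypothetical, not Llama Travel's actual setup.

```python
# Hypothetical base prompt and topic list; the wording is illustrative only.
BASE_PROMPT = "You are Llamita, the support agent for Llama Travel."

ESCALATION_TOPICS = [
    "the new premium package",
    "bookings with airlines affected by strikes",
]


def build_system_prompt(base: str, topics: list[str]) -> str:
    """Append an explicit 'escalate these topics' rule to the base prompt."""
    if not topics:
        return base
    rules = "\n".join(f"- {topic}" for topic in topics)
    return (
        f"{base}\n\n"
        "If the user's request is about any of the topics below, do not try "
        "to resolve it yourself. Collect the key details and escalate to a "
        "human agent:\n"
        f"{rules}"
    )


prompt = build_system_prompt(BASE_PROMPT, ESCALATION_TOPICS)
print(prompt)
```

Keeping the topics in a separate list means the customer engagement team can add or remove a topic (say, once the strike is over) without touching the rest of the prompt.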

However, regardless of training, there are some obvious areas where we need special knowledge. Anything related to legal issues, security situations, edge cases, and users being explicit in their interactions requires human intervention.

Why? Let’s break those situations down:

- Legal issues: AI is useful in many situations, but an LLM is not a lawyer or legal expert. It’s not ideal if Llamita makes a mistake about a ticket price, but it’s a whole different world if it makes a mistake with legal repercussions. Best case is to send this to a person who knows.

- Security issues: Similar to legal issues, imagine a customer’s credit card has a potential breach. Llamita can freeze the account and notify support, but a human agent should contact the individual to confirm actions and offer guidance tailored to the situation.

- Edge cases: As stated before, Llamita only knows what it knows. Identifying edge cases can be difficult, and you don’t want to escalate everything. Set up Llamita to try handling the case and collecting as much information as possible before sending to a human.

- User being explicit: If the user is highly distressed (e.g., using ALL CAPS, which is the equivalent of yelling, or directly threatening legal action), we need a human to step in. While AI can identify emotions, it does not truly understand them, so it is best to leave it to the people experts.
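The four triggers above could be approximated with simple rules before anything fancier is tried. This is only a sketch: the keyword lists, the 70% uppercase threshold, and the three-attempt cutoff for edge cases are assumptions for illustration, and a production agent would more likely rely on a classifier or the LLM itself.

```python
import re

# Hypothetical keyword lists; a real deployment would tune or replace these.
LEGAL_TERMS = ("lawyer", "lawsuit", "sue", "legal action")
SECURITY_TERMS = ("fraud", "stolen", "hacked", "unauthorized")


def is_shouting(message: str, threshold: float = 0.7) -> bool:
    """Treat a message as shouting when most of its letters are uppercase."""
    letters = [ch for ch in message if ch.isalpha()]
    if len(letters) < 10:  # too short to judge
        return False
    upper = sum(1 for ch in letters if ch.isupper())
    return upper / len(letters) >= threshold


def contains_term(message: str, terms: tuple[str, ...]) -> bool:
    """Whole-word, case-insensitive match so 'sue' does not match 'issue'."""
    return any(
        re.search(rf"\b{re.escape(term)}\b", message, re.IGNORECASE)
        for term in terms
    )


def escalation_reasons(message: str, failed_attempts: int = 0) -> list[str]:
    """Return every reason this message should go to a human (may be empty)."""
    reasons = []
    if contains_term(message, LEGAL_TERMS):
        reasons.append("legal")
    if contains_term(message, SECURITY_TERMS):
        reasons.append("security")
    if is_shouting(message):
        reasons.append("distressed_user")
    if failed_attempts >= 3:  # assumed cutoff for an unresolved edge case
        reasons.append("edge_case")
    return reasons


print(escalation_reasons("I WILL TAKE LEGAL ACTION IF THIS IS NOT FIXED"))
```

Returning a list of reasons rather than a single yes/no keeps the information Llamita needs later to pick the right team.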

Here is an example of the summary Llamita sends to the human agent when it escalates the case.

3.2 Post and Pre Escalation

Ok, so Llamita needs to send the case to the right person. Now we need to prepare the human agent and take notes for future cases.

Llamita can call another agent to make a summary of the interaction with all relevant information. That same agent can collect data from other agents, for example, the number of attempts the user has made to solve the issue, detected intents, user’s profile info, how the user is feeling, and any other relevant data to prepare the human agent.

Basically, think: what would you need to know to jump in and solve the issue?
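One way to picture that summary is as a small record with a plain-text rendering for the human agent. The sketch below is an assumption about shape only: the `HandoffSummary` class, its field names, and the sample customer are all made up for illustration.

```python
from dataclasses import dataclass, field


@dataclass
class HandoffSummary:
    """What a human agent needs to jump in; every field name is an assumption."""
    user_name: str
    issue: str
    detected_intents: list[str] = field(default_factory=list)
    attempts: int = 0
    sentiment: str = "unknown"
    notes: str = ""

    def to_text(self) -> str:
        """Render a short briefing the human agent can read at a glance."""
        intents = ", ".join(self.detected_intents) or "none detected"
        lines = [
            f"Customer: {self.user_name}",
            f"Issue: {self.issue}",
            f"Detected intents: {intents}",
            f"Attempts so far: {self.attempts}",
            f"Sentiment: {self.sentiment}",
        ]
        if self.notes:
            lines.append(f"Notes: {self.notes}")
        return "\n".join(lines)


# Made-up example case, not real customer data.
summary = HandoffSummary(
    user_name="Ana",
    issue="Charged twice for a Lima to Cusco flight",
    detected_intents=["billing", "refund"],
    attempts=2,
    sentiment="frustrated",
)
print(summary.to_text())
```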

With the summary ready, we can hand it over to a person to handle. The last decision Llamita needs to make is: who to send it to?

Does the case need a specialist? For legal, security, or special account issues, we send it to the corresponding Accounts, Compliance, or Technical team. For general queries, we send it to the general customer support team, who can clarify details with experts later if needed.
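The routing decision itself can be as small as a lookup with a fallback. In this sketch, the pairing of each category with a team (legal to Compliance, security to Technical, account to Accounts) is my assumption about what "corresponding" means, and the category labels are hypothetical.

```python
# Assumed mapping from case category to team, based on the teams named above.
SPECIALIST_TEAMS = {
    "legal": "Compliance",
    "security": "Technical",
    "account": "Accounts",
}
DEFAULT_TEAM = "General Support"


def route_case(category: str) -> str:
    """Return the team for a case category, falling back to general support."""
    return SPECIALIST_TEAMS.get(category.strip().lower(), DEFAULT_TEAM)


print(route_case("legal"))
print(route_case("baggage"))
```

The fallback matters: an unknown category still lands with a person instead of getting stuck.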

We also need to consider language. Let’s say Llama Travel has in-house staff who speak English, Spanish, and Portuguese, but the customer is speaking German with Llamita. Oops! We can be transparent: tell the user which languages the support team covers and let them pick one, or offer AI translation for their interaction with the support team.
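A rough sketch of that language check, assuming the user's language has already been detected and is available as a two-letter code. The function name and the shape of the returned dictionary are hypothetical.

```python
# Languages the in-house team covers in this example.
SUPPORTED_LANGUAGES = {"en": "English", "es": "Spanish", "pt": "Portuguese"}


def language_handoff_options(user_language: str) -> dict:
    """Decide how to hand off based on the user's language code."""
    code = user_language.lower()
    if code in SUPPORTED_LANGUAGES:
        # Staff speak this language, so hand off directly.
        return {"mode": "direct", "languages": [SUPPORTED_LANGUAGES[code]]}
    # Otherwise be transparent: let the user pick, or offer AI translation.
    return {
        "mode": "ask_user",
        "languages": list(SUPPORTED_LANGUAGES.values()),
        "offer_ai_translation": True,
    }


print(language_handoff_options("de"))
```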

Since the situation couldn’t be handled solely by AI, we want to take notes and flag the conversation for the people training the AI agent. From reading the conversation, I would adjust documentation and prompts to better handle this scenario.

I would also want to look at general stats for Llamita:

- Does user sentiment change during interactions?

- How many messages are exchanged until a query is resolved?

- What is the chatbot abandonment rate?

- What correlations can we draw from the data?

- What are common cases where issues aren’t resolved?

- What issues receive negative feedback?

- What percentage of conversations escalate to humans?

- How quickly are cases being picked up and resolved post-escalation?
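A few of these questions reduce to simple ratios over conversation logs. The sketch below assumes a minimal log record with four hypothetical fields and uses made-up sample data; sentiment shifts, correlations, and pickup times would need richer logs than this.

```python
from dataclasses import dataclass


@dataclass
class Conversation:
    """Minimal log record; the fields are assumptions about what gets stored."""
    messages: int
    resolved: bool
    escalated: bool
    abandoned: bool


def support_stats(conversations: list[Conversation]) -> dict[str, float]:
    """Compute escalation rate, abandonment rate, and messages per resolution."""
    total = len(conversations)
    if total == 0:
        return {
            "escalation_rate": 0.0,
            "abandonment_rate": 0.0,
            "avg_messages_to_resolution": 0.0,
        }
    resolved = [c for c in conversations if c.resolved]
    avg_messages = (
        sum(c.messages for c in resolved) / len(resolved) if resolved else 0.0
    )
    return {
        "escalation_rate": sum(c.escalated for c in conversations) / total,
        "abandonment_rate": sum(c.abandoned for c in conversations) / total,
        "avg_messages_to_resolution": avg_messages,
    }


# Made-up sample logs for illustration.
logs = [
    Conversation(messages=4, resolved=True, escalated=False, abandoned=False),
    Conversation(messages=8, resolved=True, escalated=True, abandoned=False),
    Conversation(messages=2, resolved=False, escalated=False, abandoned=True),
    Conversation(messages=6, resolved=False, escalated=True, abandoned=False),
]
stats = support_stats(logs)
print(stats)
```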

Finding patterns in the data helps identify high-priority topics for improving Llamita quickly. While it operates independently for simple cases, Llamita still needs human help to keep getting better and better.

Also, make notes of false negatives: scenarios where the AI must say no, but users insist on human contact. These are often about sensitive topics like refunds, where Llamita can only reassure and provide information.

This is still valuable data, so it’s important to ensure cancellation policies and terms are as clear and explicit as possible.

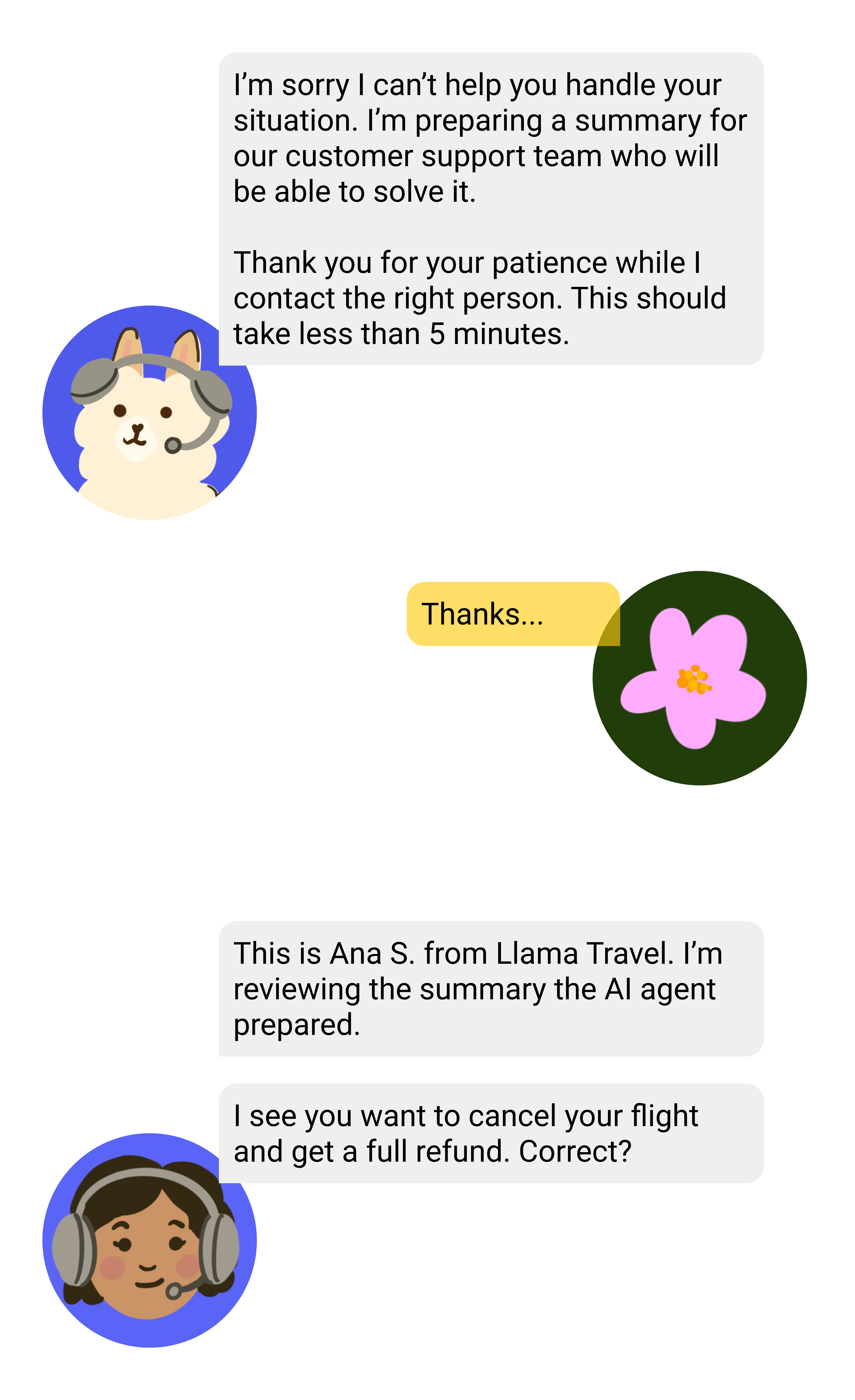

Llamita is transparent about how it sends the case to a human agent.

3.3 General Tips

Creating strong customer support agents is very similar to being a customer support agent yourself. Here is where I have some personal experience that comes in handy. Surprise! I was the secret agent all along!

In one of my previous lives I worked at a call center providing support to cable TV customers. It wasn’t for very long and it was my first job in college, but I learned a lot very quickly. The call center recruited in groups and trained us for about a month. I won’t go into details, but I remember feeling both prepared and unprepared by the time we hit the call floor.

Even after a month of practicing new concepts, product specs, and call scenarios, you can’t possibly cover every problem customers throw at you. The job required empathy and care. Here are some of the key lessons I picked up:

- Be empathetic about the situation. The first thing was always reassuring the user I’d do everything I could to help, that I understood their problem.

- Be transparent to the customer. When I was solving an issue, like adding info to their account, I’d tell the customer what I was doing. This avoided long silences and helped them feel things were being solved.

- Know when to escalate to a supervisor. When things went beyond me, I had to tag in someone who knew more. I wasn’t the expert; I was the first point of contact, starting things off with a good first impression.

These learnings map well to building AI support agents:

- Program agents to reassure users and demonstrate understanding by summarizing their issue and offering help.

- Let agents provide status updates during issue resolution to maintain trust and transparency.

- Set boundaries for agent escalation: when a case is complex or emotional, route to human agents for deeper support.

For more details you’ll have to tune in for the next article! I recently got a GenAI customer support certificate, so we’ll put that new knowledge to good use!

It was me in the animal print all along!

I hope you liked this deep dive into building good AI agents. It’s always easier to understand new abstract concepts when we match them to an example, and for me, it’s even better when we add happy little animals to our analogies.

We worked on a specific case for a travel agency, but customer service principles can be generalized to other products. It’s always about being empathetic and kind to the customer and knowing your product up and down, tail to bottom.

Those rules apply whether you are selling flights to Lima or flower bouquets. Be nice and wise, and you will build the best agents in the world.

I have to confess there are also other areas of knowledge I’ve drawn from to write these articles. Training an LLM was very useful for thinking about information hierarchy and documentation, but my work in diplomacy has been super useful too.

I think in a future blog I will focus on how to say no without saying no and how to tell someone they are wrong in the most polite way possible. Super useful skills for agents and for life.

Just like I said before, know your product and be kind to the user, because you might end up being on the other side of an AI agent very soon.

That’s Enough About Me!

What do you think? What should I focus on next?

Which was your favorite animal drawing? I loved Llamita with the Chullo hat. Which was your favorite article? Since I wrote them, I love them all.

Let me know—shoot me an email! 😊

📩

sifuentesanita@gmail.com