LLM Master

AI • Data • LLM

August 14

A tale about training an LLM. It's very similar to training Pokémon.

LLMs, or large language models, are one of the big AI tools right now.

Are they good? Are they bad? Yes and no.

Like any tool, it depends on how it's being used.

Will AI replace us? Unfortunately, for some of us, yes. New technological advancements go hand in hand with replacing 'repetitive' work, and you can find endless examples throughout history. Ever since we got creative and started adding wheels to carts, we haven't stopped looking for other ways to reduce work.

If we can find a way to be lazy, we will.

What can we do now? Babe, wake up, it's time to use AI. We can only catch up, so don't fall too far behind. If you have the chance to test a new technology early on, go for it. Even if the tool doesn't take off completely, knowledge is never wasted.

I speak from experience because I found myself in this very situation in early 2024. I needed to train an LLM.

Did I know about LLMs back then? No, babe. It was time to learn.

Let me tell you about it.

Fun fact: Misdreavus is my favorite Pokémon

1) What is an LLM and how is it a Pokémon?

First, a disclaimer. My knowledge is mostly on the content, training, and documentation side. Implementation was done by engineers. So, in this case I'm not a super technical Pokémon trainer, but I was able to win some battles.

LLMs are artificial intelligence tools that use large amounts of data to understand and generate natural human language. They are good at predicting what comes next, so they can help you improve your writing, bounce ideas around, and work through calculations. However, they aren't all-powerful: they are limited by the data used to train them, and they inherit any implicit biases that those data pools come with.
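To make "predicting what comes next" a little more concrete, here is a deliberately tiny toy: a word-pair counter that guesses the next word based only on what it saw in its training text. Real LLMs use neural networks over tokens, not simple word counts, so treat this as an intuition pump rather than how they actually work. The function names are made up for illustration.

```python
from collections import Counter, defaultdict
from typing import Optional


def train_bigrams(text: str) -> dict[str, Counter]:
    """Count which word follows which in the training text."""
    words = text.lower().split()
    follows: dict[str, Counter] = defaultdict(Counter)
    for current, nxt in zip(words, words[1:]):
        follows[current][nxt] += 1
    return follows


def predict_next(follows: dict[str, Counter], word: str) -> Optional[str]:
    """Return the most frequent follower of `word`, or None if it was never seen."""
    counts = follows.get(word.lower())
    if not counts:
        # The toy "model" only knows what was in its training data.
        return None
    return counts.most_common(1)[0][0]


corpus = "pikachu uses thunderbolt . pikachu uses quick attack . lapras uses surf ."
model = train_bigrams(corpus)
print(predict_next(model, "pikachu"))  # uses
print(predict_next(model, "gengar"))   # None
```

The `None` for "gengar" is the same limitation described above, in miniature: if something never appeared in the training data, the model has nothing to go on.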

We can think of LLMs as having different 'parts':

- Corpus = the data they draw from

- Pre-prompt = the instructions for how they use this data

- Output = the final outcome they give you

You also want to make sure the output is relevant and valuable, so quality evaluation is part of the process too.
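Here is a rough sketch of how those parts can meet in practice: the pre-prompt and the relevant documentation are often sent together as a "system" message ahead of the user's question, in the role/content message shape many chat APIs use. This only assembles the messages and does not call any model (the model's reply would be the output). The persona text, the "answer only from the documentation" rule, and the function name are my own hypothetical examples, not the project's actual prompt.

```python
def build_messages(persona: str, documentation: list[str], question: str) -> list[dict[str, str]]:
    """Combine the pre-prompt (persona + rules), corpus excerpts, and the user's question."""
    system_prompt = (
        f"{persona}\n"
        "Answer using only the documentation below. "
        "If the answer isn't there, say you don't know.\n\n"
        "Documentation:\n" + "\n\n".join(documentation)
    )
    return [
        {"role": "system", "content": system_prompt},  # how the model should behave
        {"role": "user", "content": question},         # what the user actually asked
    ]


coach = "You are a positive and encouraging fitness instructor."
messages = build_messages(coach, ["TOPIC: Example Workout\n- Duration minutes: 20"], "How long is it?")
for message in messages:
    print(message["role"], "->", message["content"][:50])
```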

I theorize they are like Pokémon because they can be powerful, but they depend on you to train them properly, and they can only perform the limited set of moves you let them learn.

Now let's see if my analogy works:

- Documentation (the corpus) = the moves your Pokémon has and its level

- Pre-prompt = how you train the Pokémon

- Output = the Pokémon using its training and attacks; basically how it fights

- QA = making sure it's winning battles and getting ribbons

Is this my best analogy? Pokémon fans say yes.

Let's go over my Pokémon training strategy now.

Image credit: Where to find Sneasel in Pokemon Crystal? by Dragonite Trainer

A Pokémon battle between Gyarados and another of my favorites, Sneasel.

2) Documentation

Or selecting what moves to teach your Pokémon.

Before making any decisions about what types of documents you need to ‘feed’ your LLM, you need to think of the use case. What kinds of battles will your LLM fight? Most likely, your LLM will already have access to a large pool of information collected from the internet. Think of what ChatGPT already knows.

But this pool, however vast, is limited. For my use case, it had very little relevant information. I needed my LLM to answer product-specific questions for the Freeletics app. The untrained model knew what Freeletics was, but had no information on the different tabs or exclusive workouts.

What did I do? I started curating information to feed it. I divided the information into two large buckets: internal sources and external sources. I also wanted to influence the tone of how it spoke about fitness, so I curated Freeletics articles that were informative and descriptive. I also researched the most frequent fitness questions people asked online and wrote four pillar articles to guide the LLM’s tone of voice.

The biggest part of the work was adding product-specific information. This involved pulling data from the backend and other sources and translating it into a format the LLM could understand.

At first, I thought that writing in a more casual, human way would work best. But after trial and error, I found the best formula: smaller chunks of information that were clearly labeled. Basically, think bullet points instead of large paragraphs.

This became especially useful when I moved on to the next part—training the Pokémon to best use its moves.
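For the curious, here is a minimal sketch of that "smaller, clearly labeled chunks" formula, assuming the product data comes out of the backend as simple key/value records. The field names and the sample workout are hypothetical placeholders, not Freeletics' real data model.

```python
def record_to_chunk(record: dict[str, str], label_field: str = "name") -> str:
    """Turn one flat record into a short chunk: a clear label plus one bullet per fact."""
    lines = [f"TOPIC: {record[label_field]}"]
    for key, value in record.items():
        if key == label_field:
            continue
        # Spell out every field name so the model never has to guess what a value means.
        lines.append(f"- {key.replace('_', ' ').capitalize()}: {value}")
    return "\n".join(lines)


def build_chunks(records: list[dict[str, str]]) -> list[str]:
    """One small, self-contained chunk per record instead of one long paragraph."""
    return [record_to_chunk(record) for record in records]


# Hypothetical record, e.g. exported from a backend
workouts = [
    {"name": "Example Workout", "tab": "Example Tab", "duration_minutes": "20", "equipment": "None"},
]
for chunk in build_chunks(workouts):
    print(chunk)
```

This prints a four-line chunk that starts with `TOPIC: Example Workout`, followed by one labeled bullet per field: bullet points instead of paragraphs.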

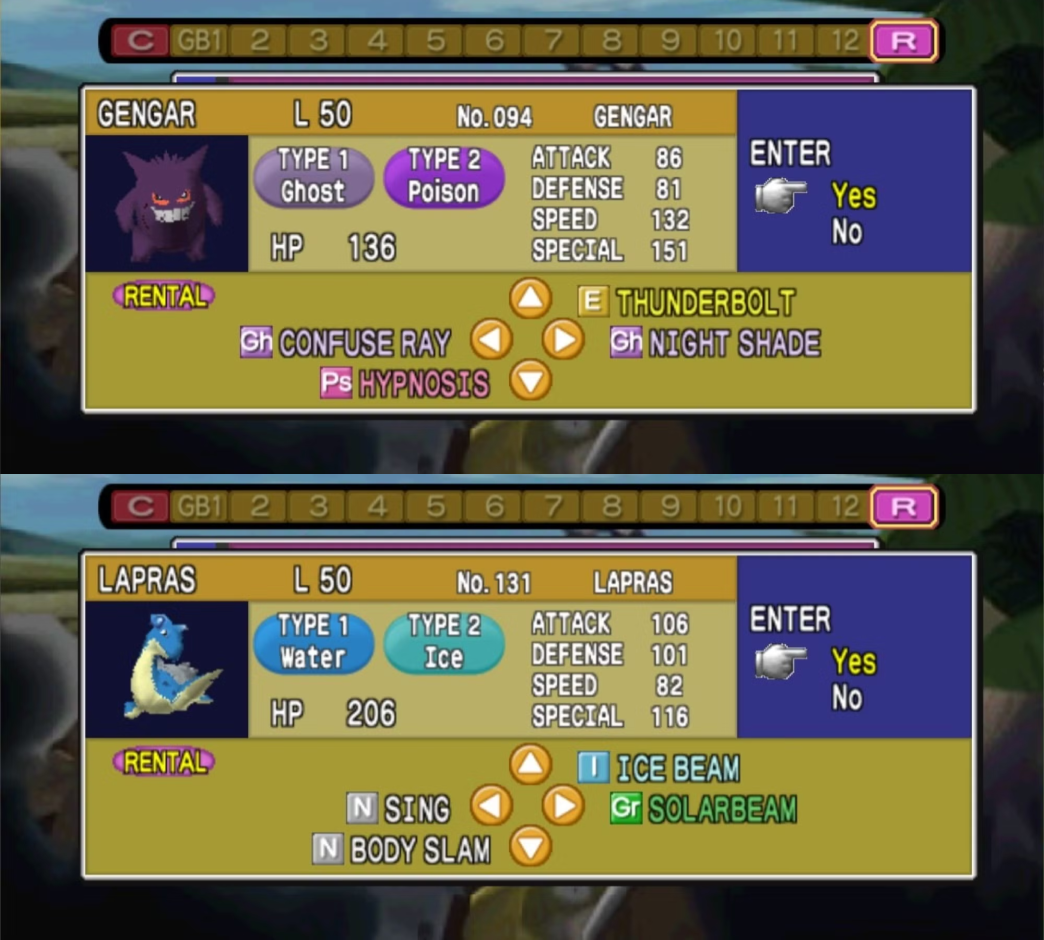

Image credit: Pokemon Stadium: 10 Best Pokemon, Ranked by DualShockers

My sweet Lapras and Gengar and their move set in Pokémon Stadium

3) Pre-prompt

Or how you use your Pokémon in battle.

The pre-prompt tells the LLM how to behave. Here, specificity is your friend. You can tell the LLM to pretend to be a positive and encouraging fitness instructor, or a pesky Pokémon rival that’s going to push you to your limits.

This is where the labels I had specified in the documentation became crucial.

The product manual had very specific internal terms. For example, fitness plans were called “training journeys”. However, new users might not be familiar with that concept and could use other, more generic names for them. In the prompt, I instructed the LLM to recognize these generic terms and, when responding to the user, to use our internal term so that users would learn how to find this info in the app.
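One way to express a rule like that is a small glossary that maps generic phrases to the internal term, rendered into the prompt as explicit instructions. In this sketch, only the internal term "training journey" comes from the project; the generic synonyms and the helper names are assumptions for illustration.

```python
# Internal term -> generic phrases users might type instead (synonyms are hypothetical).
GLOSSARY = {
    "training journey": ["fitness plan", "workout plan", "training program"],
}


def glossary_instructions(glossary: dict[str, list[str]]) -> str:
    """Render the glossary as plain-language rules for the pre-prompt."""
    lines = []
    for internal, generic in glossary.items():
        phrases = ", ".join(f'"{g}"' for g in generic)
        lines.append(
            f'- If the user says {phrases}, they mean a "{internal}". '
            f'Use the term "{internal}" in your answer.'
        )
    return "Terminology rules:\n" + "\n".join(lines)


def find_generic_terms(question: str, glossary: dict[str, list[str]]) -> dict[str, str]:
    """Return {generic phrase found in the question: internal term}, handy for testing."""
    q = question.lower()
    return {g: internal for internal, generic in glossary.items() for g in generic if g in q}


print(glossary_instructions(GLOSSARY))
print(find_generic_terms("How do I change my workout plan?", GLOSSARY))
```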

Another benefit of breaking down the information was that it made it easier for future implementation in other languages. I wrote all the documentation in English because I wanted to avoid rewriting all the files into their localized target languages. Can you imagine going back and editing information in nine different files, and then sending each of those to translators to confirm the changes made sense? Nope. It was essential that the files stayed in English.

The plan I devised was to list each internal term alongside its translations in the documentation and to localize only the prompt. I broke the prompt down into sections and clearly documented what each part was meant to do. I even accounted for language-specific behaviors, such as speaking to German users in a formal tone. I tested my process by translating the prompt into Spanish, then sat down with the localization team to discuss what extra nuances they could add to their localized versions.
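That setup (English documentation listing each internal term with its translations, plus a prompt split into documented sections that get localized) might look roughly like the sketch below. The section names, the override mechanism, and the placeholder translations are hypothetical. For readability the "localized" section text is written in English here; in practice each locale's sections would be written by the localization team.

```python
# English documentation entry: the internal term plus placeholders for its translations.
TERM_ENTRY = {
    "term": "training journey",
    "translations": {"es": "<Spanish term>", "de": "<German term>"},
}

# The prompt is split into named, documented sections.
BASE_SECTIONS = {
    "role": "You are a fitness coach for the app.",
    "tone": "Be friendly and encouraging.",
    "language": "Always answer in English.",
}

# Each locale overrides only the sections that differ.
LOCALE_OVERRIDES = {
    "es": {"language": "Always answer in Spanish."},
    "de": {
        "tone": "Be friendly and encouraging, and address the user formally (Sie).",
        "language": "Always answer in German.",
    },
}


def build_prompt(locale: str) -> str:
    """Merge base sections with locale overrides and add the localized internal term."""
    sections = {**BASE_SECTIONS, **LOCALE_OVERRIDES.get(locale, {})}
    term = TERM_ENTRY["translations"].get(locale, TERM_ENTRY["term"])
    sections["terminology"] = f'Call fitness plans "{term}".'
    return "\n".join(f"[{name}] {text}" for name, text in sections.items())


print(build_prompt("de"))
```

The documentation files never change per language; only the small prompt sections do, which is what keeps the "nine files times translators" problem away.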

Once the pre-prompts were translated, we tested the output with a translated set of frequently asked questions to measure the success of the answers. The results were very positive!
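A bare-bones version of that kind of test run could look like this: send the same translated question set through each locale and keep every answer for grading. `ask_model` is a hypothetical stand-in for whatever model call the engineers wired up; here it is a fake function so the sketch runs on its own.

```python
from typing import Callable


def run_faq_suite(
    faqs: dict[str, list[str]],
    ask_model: Callable[[str, str], str],
) -> list[dict[str, str]]:
    """Ask every translated FAQ in every locale and collect the answers for grading."""
    results = []
    for locale, questions in faqs.items():
        for question in questions:
            answer = ask_model(locale, question)
            results.append({"locale": locale, "question": question, "answer": answer})
    return results


def fake_model(locale: str, question: str) -> str:
    # Placeholder for a real LLM call; it just echoes its inputs.
    return f"[{locale}] answer to: {question}"


faqs = {"en": ["How do I start a training journey?"], "es": ["<translated question>"]}
for row in run_faq_suite(faqs, fake_model):
    print(row)
```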

How did I measure that exactly? With the help of QA!

Interaction with Coach+, Freeletics' fitness LLM

4) QA

Or how you measure your Pokémon's success.

For this part, I collaborated with my dear friend Liza (yes, the same glamorous QA engineer featured in a previous blog post; read it here!).

We worked together to design a rubric to evaluate the LLM's answers. The score was based on the average of metrics such as relevancy, correctness, and personalization. We also added a quick feedback survey button so users could indicate whether the answer was helpful or not.
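The rubric math itself is simple: each answer gets a grade per metric, and its score is the average. In this sketch, the 1 to 5 scale and the equal weighting are assumptions; the post only names relevancy, correctness, and personalization as example metrics.

```python
from dataclasses import dataclass, fields
from statistics import fmean


@dataclass
class RubricScore:
    """One evaluator's grades for one answer (the 1-5 scale is an assumption)."""
    relevancy: int
    correctness: int
    personalization: int

    def __post_init__(self) -> None:
        # Reject grades outside the assumed 1-5 scale.
        for f in fields(self):
            value = getattr(self, f.name)
            if not 1 <= value <= 5:
                raise ValueError(f"{f.name} must be between 1 and 5, got {value}")

    def overall(self) -> float:
        """Unweighted average of the rubric metrics."""
        return fmean(getattr(self, f.name) for f in fields(self))


def sample_average(scores: list[RubricScore]) -> float:
    """Average overall score across a sample of graded answers."""
    return fmean(score.overall() for score in scores)


print(RubricScore(relevancy=5, correctness=4, personalization=3).overall())  # 4.0
```

If some metrics matter more than others, a weighted average is an easy swap, but that is a design choice the post doesn't specify.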

We were very mindful of data privacy and of complying with the GDPR, so we ensured all data was handled with care and fully anonymized before pulling random samples to evaluate.
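As a hedged illustration of "anonymize first, then sample", this sketch drops direct identifiers, masks email addresses inside free text, and only then draws a reproducible random sample. The field names are hypothetical, and this is not a complete GDPR solution: real anonymization also has to deal with indirect identifiers. Identifiers are dropped rather than hashed because hashed IDs are pseudonymized, not anonymized, data.

```python
import random
import re

EMAIL_RE = re.compile(r"[\w.+-]+@[\w-]+(?:\.[\w-]+)+")
DIRECT_IDENTIFIERS = {"user_id", "email", "name"}  # hypothetical field names


def anonymize(log: dict[str, str]) -> dict[str, str]:
    """Drop direct identifiers and mask email addresses inside the remaining fields."""
    cleaned = {k: v for k, v in log.items() if k not in DIRECT_IDENTIFIERS}
    return {k: EMAIL_RE.sub("[email removed]", v) for k, v in cleaned.items()}


def sample_for_review(logs: list[dict[str, str]], k: int, seed: int = 0) -> list[dict[str, str]]:
    """Anonymize everything first, then draw a reproducible random sample of up to k logs."""
    anonymized = [anonymize(log) for log in logs]
    rng = random.Random(seed)  # fixed seed so the same sample can be re-drawn later
    return rng.sample(anonymized, k=min(k, len(anonymized)))


example = {"user_id": "123", "question": "Email me at ana@example.com please"}
print(anonymize(example))
```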

This QA process helped me identify weak areas in the documentation and make iterative changes to improve. Analyzing trends and frequent questions also helped prioritize which topics to start with, making the process more streamlined and effective. I took notes on which battles my Pokémon wasn’t doing so great in and went back to level up.
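Spotting the battles my Pokémon was losing can be as simple as grouping graded answers by topic, then looking at which topics come up most and score lowest. The topic labels and the 3.5 threshold in this sketch are hypothetical choices, not values from the project.

```python
from collections import defaultdict
from statistics import fmean


def weak_topics(graded: list[tuple[str, float]], threshold: float = 3.5) -> list[tuple[str, int, float]]:
    """Return (topic, times asked, average score) for topics scoring below the threshold."""
    by_topic: dict[str, list[float]] = defaultdict(list)
    for topic, score in graded:
        by_topic[topic].append(score)

    weak = []
    for topic, scores in by_topic.items():
        average = fmean(scores)
        if average < threshold:
            weak.append((topic, len(scores), average))
    # Most frequently asked first, then lowest score: the best candidates to level up.
    return sorted(weak, key=lambda row: (-row[1], row[2]))


graded = [("nutrition", 2.0), ("nutrition", 3.0), ("billing", 4.5), ("workouts", 3.0)]
print(weak_topics(graded))  # [('nutrition', 2, 2.5), ('workouts', 1, 3.0)]
```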

I also got to share the work with an intern I mentored throughout the project, helping them grow their skills in documentation, data analysis, and presenting insights. I had to be very clear in documenting my processes and doing frequent check-ins to ensure we were working in sync and getting the best results from our data.

In the end, the project was very successful: 90% of respondents rated the answers as useful, and we increased activation rates by approximately 9% during A/B testing.
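For anyone who wants to compute similar headline numbers, here are the two calculations involved: the share of feedback votes marked helpful, and the lift of a test variant over control. The post doesn't say whether its ~9% is a relative lift or a difference in percentage points, so this sketch returns both; the function names are illustrative.

```python
def helpful_share(votes: list[bool]) -> float:
    """Fraction of feedback votes that said the answer was helpful."""
    if not votes:
        raise ValueError("no votes to summarize")
    return sum(votes) / len(votes)


def lift(control_rate: float, variant_rate: float) -> tuple[float, float]:
    """Return (relative lift, absolute difference in percentage points)."""
    if control_rate <= 0:
        raise ValueError("control rate must be positive")
    relative = (variant_rate - control_rate) / control_rate
    points = (variant_rate - control_rate) * 100
    return relative, points


print(helpful_share([True, True, False, True]))  # 0.75
```

Reporting which of the two a percentage refers to avoids a lot of confusion when results get shared outside the team.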

Ok, now I just took this as a chance to live a childhood dream. Apologies.

I hope this article demystifies how LLMs work and how you can train one. It’s a lot of information to consider, but it can be done with a good strategy. In fact, if you want to read the project’s results, you can check out the case study here.

I’ll be honest, this is a streamlined version of how the project was laid out. There are lots of extra considerations you have to make when dealing with new technologies. Saying that training an LLM can be done by one person is a bit of an overstatement. It takes a team to properly implement one, plus ongoing refinement to make sure you’re delivering the best value possible to users.

Things change very quickly at the forefront of technology. Even in the last few months, we’ve seen how search behavior on Google has shifted. You don’t necessarily click links anymore because AI now provides you with a quick summary. This is one of the reasons I recommend that products invest in creating their own LLM. Consumers are now used to searching using natural language rather than just key search terms.

In the end, did I become a Pokémon Master? No.

But Ash Ketchum didn’t win the Pokémon League on his first try either. If Pokémon taught me anything about training LLMs, it’s that you have to be patient, train your Pokémon with care, and make the best choices possible to win the battle.

That’s Enough About Me!

What do you think? What should I focus on next?

Are you also a Pokémon fan? Maybe an AI enthusiast? Maybe both?

Let me know—shoot me an email! 😊

📩

sifuentesanita@gmail.com