LLM Training

→ Content strategy for training an LLM to deliver fitness guidance

🏆 Outcome: Launched feature → Increased activation rate

- Client/Team: Freeletics | Data Scientists, Developers, QA Engineer, UX/Product Team

- Timeline: 9 months

- Role: Project Lead | Content Strategy + Product Design

- Tools: OpenAI, Google Suite, Miro, Figma

📐 Design process

🚩 Challenge

At the start of 2024, Freeletics set a new north star: build a fitness trainer in your pocket. To support this vision, the product team began exploring how to integrate Generative AI into the app experience.

After reviewing several use cases, we saw a clear opportunity: many users had recurring questions about the product itself. So we decided to build an AI fitness assistant—a chat-based experience where users could ask questions in natural language and get real-time answers.

This project was assigned to me. As the content designer and product content expert, I led the content strategy for training the assistant. I started by researching LLM technology, mapping content gaps, and aligning with developers, data scientists, and product stakeholders to ensure the answers were accurate, on-brand, and technically feasible.

💡Concept

A fitness trainer in your pocket

🎯 Goal

Provide product and fitness advice to users

⚠️ Considerations

New technology

Large amounts of data in different formats and languages

✅ Solution

A content strategy that is flexible, fast and easy to implement

Interaction with Coach+, the Freeletics fitness LLM

🧪 Approach

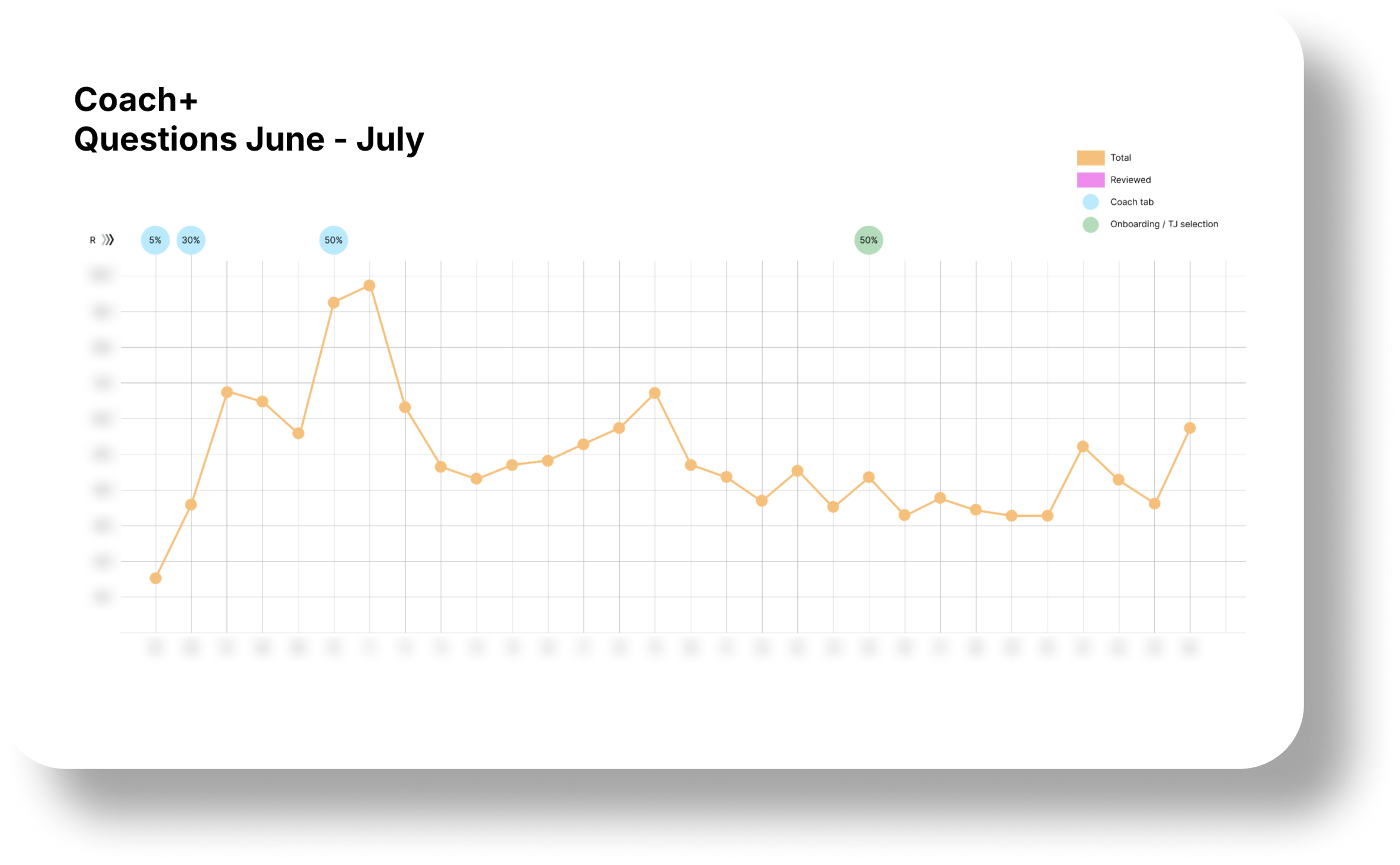

The product team strategically placed Coach+ within the app to maximize impact. With these use cases in mind, I mapped out the content needs to establish priorities, grouping information into two main categories: general fitness information and product-specific content.

I then broke down these broad categories into manageable deliverables to maintain focus and meet deadlines. Simultaneously, I developed a quality framework to set clear response standards, ensuring users would receive the best content possible.

To support scalability, I established a clear information hierarchy and created a product concept map that organized content for easy updates and seamless integration of future features.

🗓️ Strategic Content Planning

- Identified key topics and content gaps, and mapped them to use cases

- Grouped content into two main categories: general fitness and product-specific info

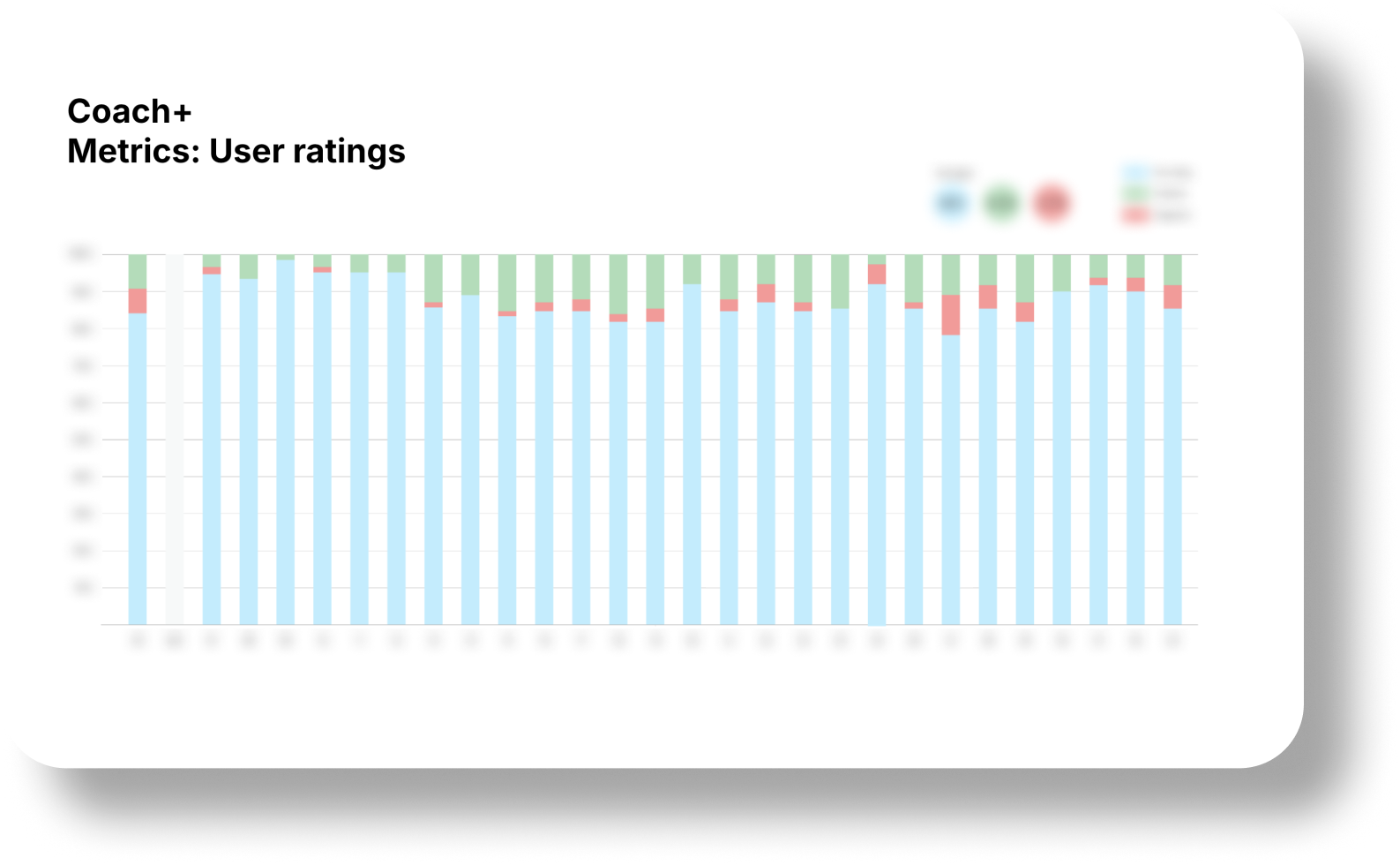

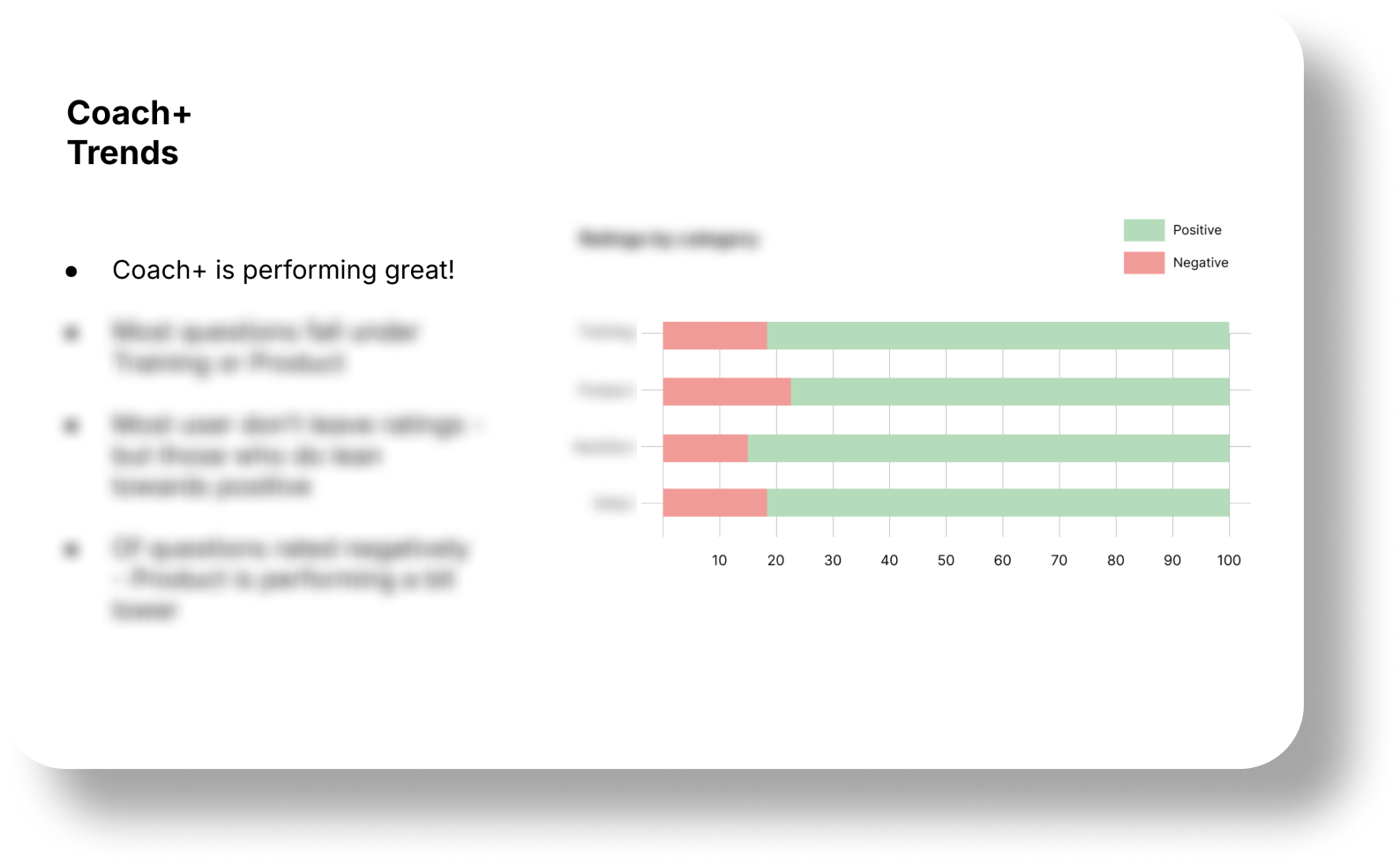

- Prioritized deliverables and outlined a QA framework for response standards

- Collaborated with developers, QA engineers, designers and data scientists to define technical needs

In this project, training the assistant involved two key components: the preprompt (instructions that define the assistant's behavior) and the custom corpus (a curated knowledge base the assistant draws from to answer queries).

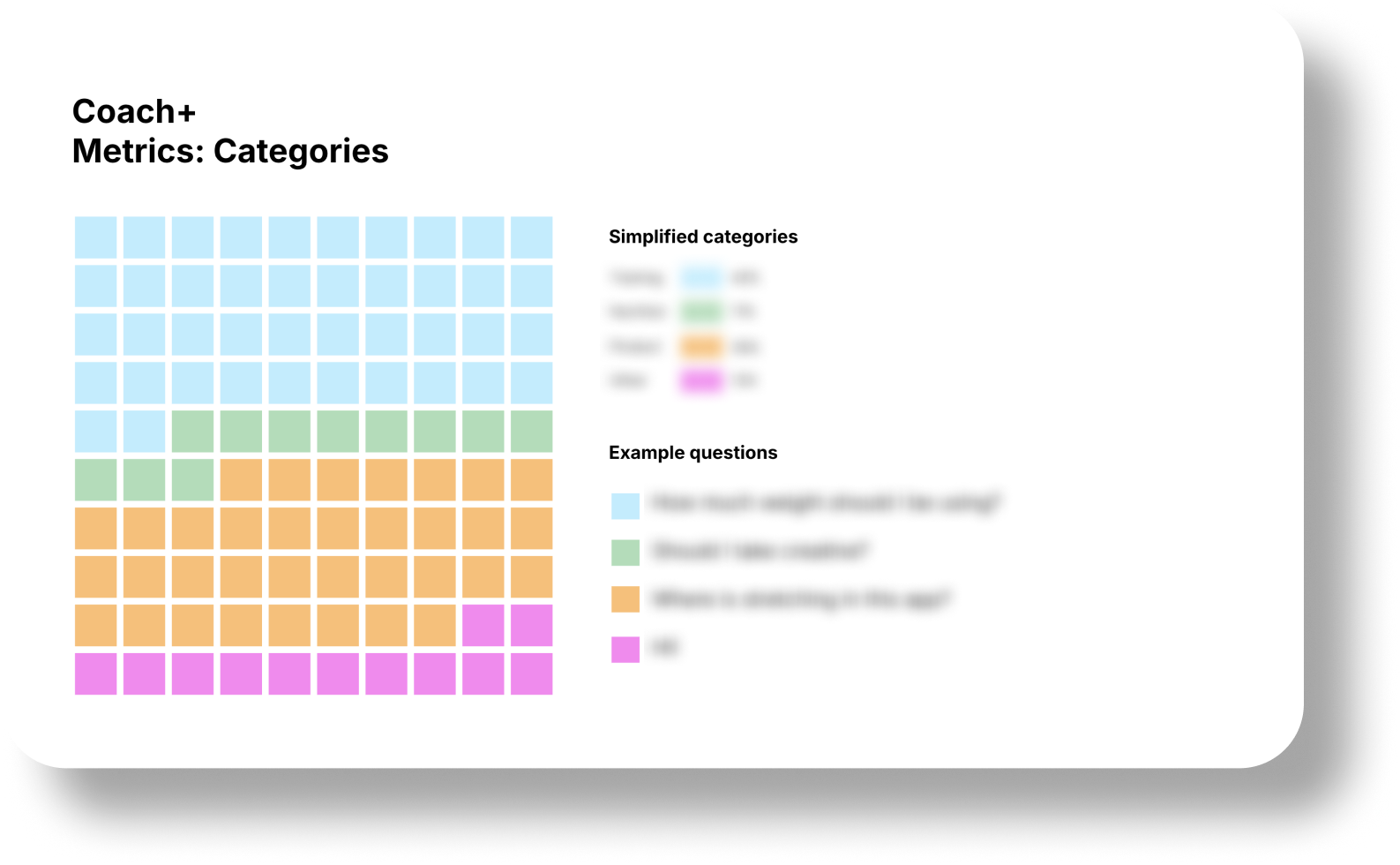

For the preprompt, we drafted a directive to ensure responses were positive and informative. For the custom corpus, I defined the scope of fitness knowledge required, starting by collecting common fitness questions users ask online to identify trends and build a list of high-priority topics.
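To make the relationship between the two components concrete, here is a minimal Python sketch of how a preprompt and a custom corpus can be combined into a single request. It is an illustration under stated assumptions, not the Coach+ implementation: the preprompt wording, the `Passage` type, the keyword-overlap `retrieve` function, and the chat-style message format are all hypothetical stand-ins.

```python
from dataclasses import dataclass

# Hypothetical preprompt wording; the real Coach+ directive is not shown in this case study.
PREPROMPT = (
    "You are a fitness assistant. Keep answers positive and informative. "
    "Answer only from the reference passages provided."
)


@dataclass
class Passage:
    """One entry in the custom corpus (for example, a curated blog article)."""
    title: str
    text: str


def tokenize(text: str) -> set[str]:
    """Lowercase the text and strip basic punctuation from each word."""
    return {word.strip(".,?!").lower() for word in text.split()}


def retrieve(question: str, corpus: list[Passage], top_k: int = 2) -> list[Passage]:
    """Assumed retrieval step: rank passages by simple keyword overlap."""
    question_words = tokenize(question)
    scored = [
        (len(question_words & tokenize(f"{p.title} {p.text}")), p) for p in corpus
    ]
    # Drop passages that share no words with the question.
    scored = [(score, p) for score, p in scored if score > 0]
    scored.sort(key=lambda pair: pair[0], reverse=True)
    return [p for _, p in scored[:top_k]]


def build_messages(question: str, corpus: list[Passage]) -> list[dict]:
    """Combine the preprompt and the retrieved passages into chat-style messages."""
    context = "\n\n".join(f"{p.title}: {p.text}" for p in retrieve(question, corpus))
    return [
        {"role": "system", "content": f"{PREPROMPT}\n\nReference passages:\n{context}"},
        {"role": "user", "content": question},
    ]
```

The preprompt stays fixed across conversations, while the corpus can grow or be corrected without touching the instructions, which is what keeps a content strategy like this one easy to update.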

Using these insights, I curated relevant articles from the Freeletics blog, selecting content aligned with our goals and feeding it into the LLM. For the most frequently asked topics, I wrote dedicated articles providing clear, actionable advice in our brand voice.

On the product side, I conducted a comprehensive audit of the app, breaking down its features and sections to identify areas where users might need guidance. I then categorized and prioritized these topics based on their importance to new users. This structured approach allowed me to set clear milestones for the initial release, leaving time for quality testing and refinement.
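The audit-and-prioritize step can be pictured as a small data exercise. The sketch below is a hypothetical model, not the actual audit sheet: the `Topic` fields, the 1 to 5 importance scale, and the `min_importance` cutoff are assumptions made for illustration.

```python
from dataclasses import dataclass


@dataclass
class Topic:
    """One audited topic. All fields are hypothetical, for illustration only."""
    name: str
    category: str             # "product" or "fitness"
    new_user_importance: int  # assumed scale: 1 (low) to 5 (high)
    covered: bool             # True if suitable content already exists


def release_backlog(topics: list[Topic], min_importance: int = 4) -> list[Topic]:
    """Return uncovered topics at or above the cutoff, most important first."""
    gaps = [
        t for t in topics
        if not t.covered and t.new_user_importance >= min_importance
    ]
    return sorted(gaps, key=lambda t: t.new_user_importance, reverse=True)
```

Anything below the cutoff stays on the list for later milestones rather than being dropped, which leaves room in the first release for testing and refinement.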

🔍 Content Research & Curation

- Collected common user questions and concerns from multiple online platforms

- Audited the app to identify content gaps and prioritize topics relevant to new users

- Selected and created content for top fitness topics, including curated blog articles